Category: Experiments

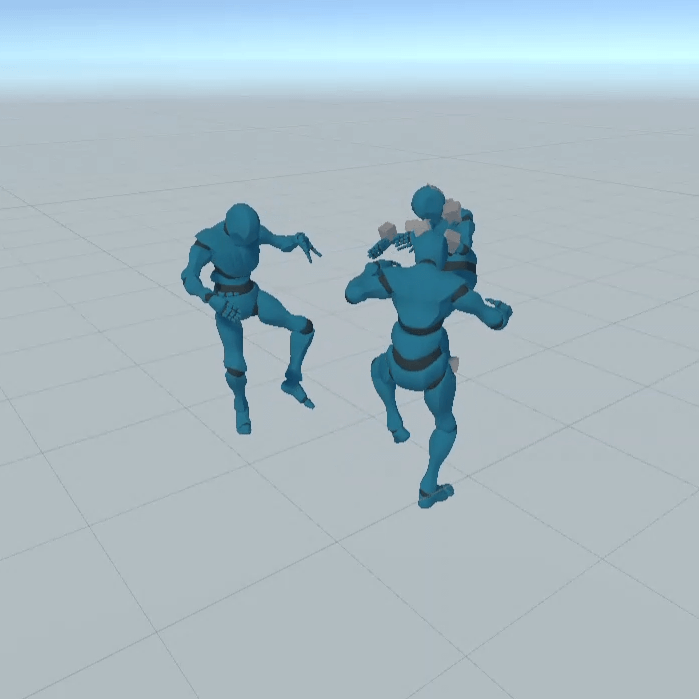

Virtual Reality Puppets

An ongoing research project into creating and puppeteering characters in VR.

Embodiment Research

Research into embodiment in VR, including but not limited to sharing your body with another person.

Old Projects

A collection of older projects that are fun to share.